I blogged here about the data centre investment boom driven by the exuberance surrounding AI.

AI appears set to become the defining General Purpose Technology of the next 20-30 years or more. It has already become the “world’s digital assistant”: the share of non-work-related ChatGPT messages has been rising and, as of June 2025, makes up 73% of all usage.

Writing tasks, such as editing, critiquing text or translation, are the second most common use. Seeking information has grown faster than any other category over the past 12 months: ChatGPT is now regularly used to look for recipes, products, people and current events, a direct threat to traditional search engines. Multimedia use has also grown, with a large rise in April 2025 following the release of new image-generation capabilities.

In India, AI usage is predominantly focused on software development and computer programming.

The UAE and Singapore lead the world in AI usage.

Interestingly, the FT feature also points to concerns about the impact of AI usage on people’s cognitive abilities, especially their ability to think critically.

Data scientist Austin Wright likened it to the diminished sense of direction that has followed the widespread adoption of satellite navigation systems. A recent paper by Nataliya Kosmyna at MIT’s Media Lab assessed the impact of using an AI assistant for essay writing. The experiment split users into three groups: the first could use ChatGPT for assistance, the second could use a search engine (but with no AI overview), and the third could rely on nothing more than brain power. The study concluded that using an LLM in an educational context could lead to the accumulation of “cognitive debt” and a “likely decrease in learning skills”. It also found that LLM users struggled to quote accurately from their own essays and often felt no ownership of the finished work.

Generative AI is clearly in its early stages, so debates about its impact on economy-wide productivity, profit generation and job displacement are natural.

Two early trends in AI are undisputed: technology firms have plunged headlong into massive AI-centred investments (semiconductor chips, cloud infrastructure, data centres and LLMs), and these investments are propping up economic activity in the US.

It is becoming clear that these investments are now in bubble territory. Nothing symbolises this more than the series of audacious deals OpenAI has announced over the last month, which together amount to more than $1 trillion of computing power. Sample this:

Tapping into that much capital has led OpenAI to weave deals that draw on the financial resources of other Big Tech companies, adding to a growing web of financial dependencies across the AI world. In the process, it could be helping to create a new level of systemic risk in an industry that may already have entered bubble territory… the deal with chipmaker AMD… could eventually result in OpenAI buying enough chips to require six gigawatts of electric power, three times the capacity of the Hoover dam. Each gigawatt of new computing capacity is generally assumed to require capital investment of about $50bn, of which some two-thirds could flow to AMD to pay for chips.

OpenAI, however, has only placed a firm order for the first gigawatt, and it is not clear how much of this deal — or other parts of its mammoth spending spree — will ever be fully realised. The arrangement also came with an unusual sweetener that could lead to AMD in effect giving OpenAI about 10 per cent of its stock, currently worth $36bn… One challenge has been weighing the odds that these and other megadeals will ever be fully consummated. Among the unknowns: whether demand for AI services will be strong enough to justify building all the data centres, whether the new facilities can be financed, built and equipped, and whether there will be enough electricity to power them.
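The FT's rule of thumb lends itself to back-of-the-envelope arithmetic. The sketch below is a minimal illustration, assuming only the quoted figures of about $50bn of capital per gigawatt and a two-thirds share flowing to AMD; these are rough assumptions reported by the FT, not disclosed contract terms.

```python
# Back-of-the-envelope sizing of the OpenAI-AMD deal, using the FT's rough
# assumptions quoted above (not disclosed contract terms).
CAPEX_PER_GW_BN = 50.0  # assumed capital investment per gigawatt, $bn
CHIP_SHARE = 2 / 3      # assumed share of capex paid to AMD for chips


def deal_size(gigawatts: float) -> tuple[float, float]:
    """Return (total capex, chip spend) in $bn for a given capacity in GW."""
    total = gigawatts * CAPEX_PER_GW_BN
    return total, total * CHIP_SHARE


full_total, full_chips = deal_size(6)  # the full six-gigawatt deal
firm_total, firm_chips = deal_size(1)  # only the first gigawatt is a firm order

print(f"6 GW: ~${full_total:.0f}bn capex, ~${full_chips:.0f}bn to AMD")
print(f"1 GW: ~${firm_total:.0f}bn capex, ~${firm_chips:.0f}bn to AMD")
```

On these assumptions the full deal implies roughly $300bn of capital spending, about $200bn of it on AMD chips, while the firm order covers only about a sixth of that.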

And there are remarkable parallels with previous bubbles.

Nvidia has become “the central bank of AI, they’re the lender of last resort”, says Charles Fitzgerald, a tech investor and former Microsoft executive. Taking equity from vendors, and using the money to support further borrowings, has made the AI boom dependent on a high level of convoluted financial engineering, he adds. The circularity has also prompted questions about how sustainable the revenues will turn out to be. It echoes arrangements that were a common feature of the dotcom bubble at the end of the 1990s, says Bill Janeway, a former chair of investment firm Warburg Pincus. Back then, an enterprise software company might have paid to advertise with a new internet media company, in return for the media company buying its software. That artificial arrangement would have created the illusion of stronger demand for both companies’ services, adds Janeway. In the closest parallel to today’s AI infrastructure boom, telecom equipment companies such as Lucent and Nortel advanced money to customers in the 1990s to buy equipment, only to face write-offs when a wave of bankruptcies hit the industry.

All these deals exist only on paper and are at a very nascent stage. They will become operational only once financial backers are secured, and that will invariably create systemic risks in the financial markets.

Investments in data centres and cloud infrastructure are medium- to long-term in nature. They are built on the presumption that demand will materialise. But if demand does not materialise, or collapses over time, the whole model will crumble. No amount of risk mitigation or ingenuity in financing structures can address this scenario. Not even the massive cash surpluses and strong balance sheets of the Big Tech firms and chipmakers can insulate the economy from the shock. And if this risk materialises, given the amounts involved, it is certain to have very large impacts on financial markets and the wider economy. The biggest and most immediate disruption will be in equity markets, which have bid the prices of all the companies involved deep into bubble territory. This disruption can then cascade across the economy through the balance sheets of corporates, financiers and households.

Turning to the economic impact of AI, a Pantheon Macroeconomics report finds that US GDP would have grown a mere 0.6 per cent annualised in the first half of 2025 but for AI-related spending, half the actual rate. It also found that total private fixed investment, which rose by 3 per cent year-on-year in the second quarter, would have fallen by around 1.5 per cent without its AI-related components.
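The Pantheon counterfactuals can be restated as simple arithmetic. This is a minimal sketch using only the figures quoted above; the actual growth rate of roughly 1.2 per cent is inferred from the phrase "half the actual rate" and is an approximation, not an official statistic.

```python
# Counterfactual arithmetic behind the Pantheon estimates quoted above.
# Actual H1 2025 growth is inferred from "half the actual rate"; it is an
# approximation, not an official figure.


def ai_contribution(actual: float, without_ai: float) -> float:
    """Percentage-point contribution of AI-related spending."""
    return actual - without_ai


def ai_share_of_growth(actual: float, without_ai: float) -> float:
    """Fraction of actual growth attributable to AI-related spending."""
    return ai_contribution(actual, without_ai) / actual


gdp_without_ai = 0.6              # % annualised, H1 2025 (Pantheon)
gdp_actual = 2 * gdp_without_ai   # "half the actual rate" implies ~1.2%

inv_actual, inv_without_ai = 3.0, -1.5  # private fixed investment, % y/y, Q2

print(f"GDP: AI adds {ai_contribution(gdp_actual, gdp_without_ai):.1f}pp, "
      f"{ai_share_of_growth(gdp_actual, gdp_without_ai):.0%} of growth")
print(f"Investment: AI adds {ai_contribution(inv_actual, inv_without_ai):.1f}pp")
```

The investment figures show the asymmetry most starkly: AI-related components turn a 1.5 per cent fall into a 3 per cent rise, a swing of about 4.5 percentage points.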

Ruchir Sharma captured the importance of AI in propping up the US economy, provocatively describing it as “one big AI bet”.

The hundreds of billions of dollars companies are investing in AI now account for an astonishing 40 per cent share of US GDP growth this year… AI companies have accounted for 80 per cent of the gains in US stocks so far in 2025… But without all the excitement around AI, the US economy might be stalling out, given the multiple threats… The main reason AI is regarded as a magic fix for so many different threats is that it is expected to deliver a significant boost to productivity growth, especially in the US. Higher output per worker would lower the burden of debt by boosting GDP. It would reduce demand for labour, immigrant or domestic. And it would ease inflation risks, including the threat from tariffs, by enabling companies to raise wages without raising prices.

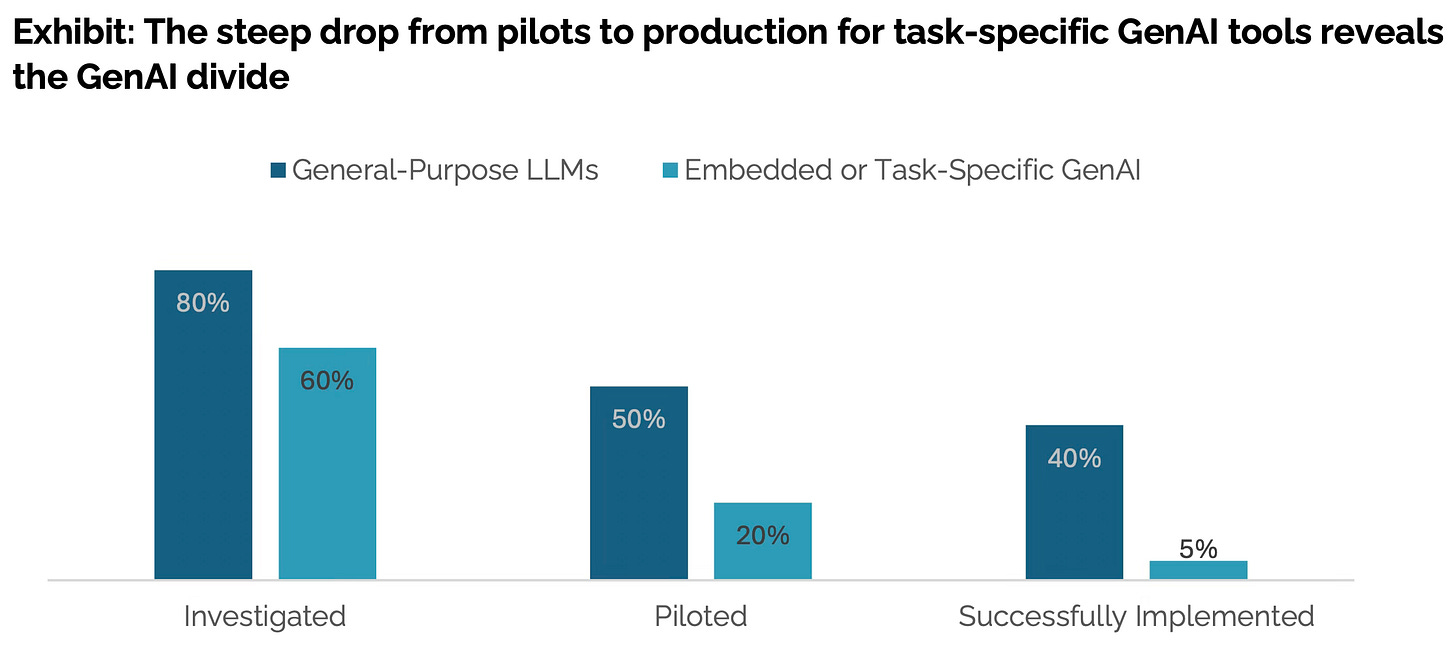

But there is a growing chorus of opinion casting doubt on the impact of AI, at least for now. A recent McKinsey report said, “GenAI is everywhere, except in company P&L”. It points out that while nearly eight in ten companies have deployed gen AI in some form, roughly the same percentage report no material impact on earnings. An MIT report from July 2025, which analysed 300 publicly disclosed AI implementations in 52 firms, found that just 5% of integrated AI pilots were extracting millions in value, while 95% had no measurable P&L impact.

The McKinsey report draws a distinction between horizontal and vertical use cases.

Many organizations have deployed horizontal use cases, such as enterprise-wide copilots and chatbots; nearly 70 percent of Fortune 500 companies, for example, use Microsoft 365 Copilot. These tools are widely seen as levers to enhance individual productivity by helping employees save time on routine tasks and access and synthesize information more efficiently. But these improvements, while real, tend to be spread thinly across employees. As a result, they are not easily visible in terms of top- or bottom-line results.

By contrast, vertical use cases—those embedded into specific business functions and processes—have seen limited scaling in most companies despite their higher potential for direct economic impact. Fewer than 10 percent of use cases deployed ever make it past the pilot stage, according to McKinsey research. Even when they have been fully deployed, these use cases typically have supported only isolated steps of a business process and operated in a reactive mode when prompted by a human, rather than functioning proactively or autonomously. As a result, their impact on business performance also has been limited.

Echoing the McKinsey report, the MIT report shows that while 60% of surveyed firms evaluated vertical use cases, just 5% reached production.

The McKinsey report points to the promise of agentic AI in transforming processes.

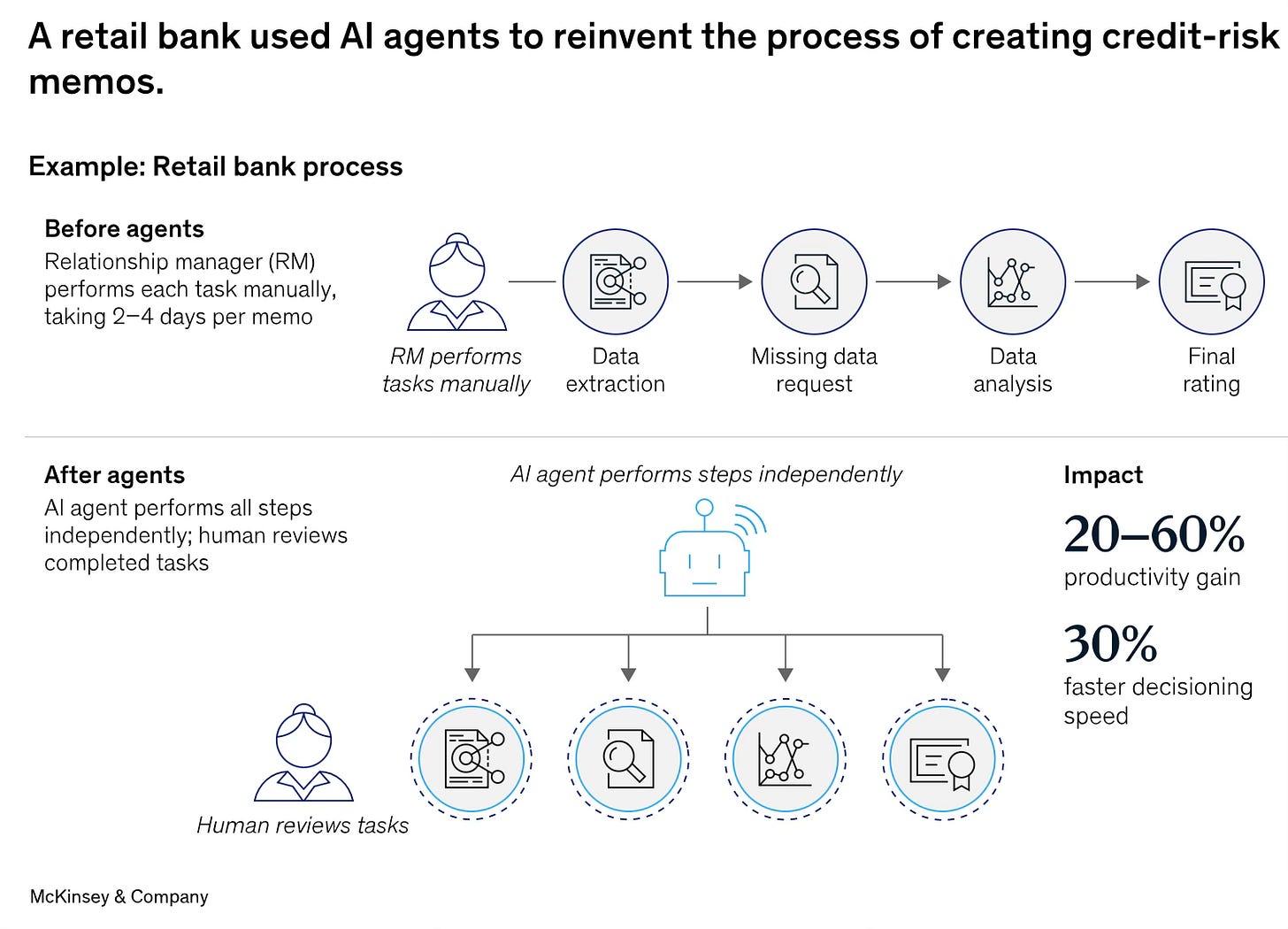

AI agents mark a major evolution in enterprise AI—extending gen AI from reactive content generation to autonomous, goal-driven execution. Agents can understand goals, break them into subtasks, interact with both humans and systems, execute actions, and adapt in real time—all with minimal human intervention… in the vertical realm, where agentic AI enables the automation of complex business workflows involving multiple steps, actors, and systems—processes that were previously beyond the capabilities of first-generation gen AI tools.

The report identifies five ways in which agents transform processes.

Agents accelerate execution by eliminating delays between tasks and by enabling parallel processing. Unlike in traditional workflows that rely on sequential handoffs, agents can coordinate and execute multiple steps simultaneously, reducing cycle time and boosting responsiveness. Agents bring adaptability. By continuously ingesting data, agents can adjust process flows on the fly, reshuffling task sequences, reassigning priorities, or flagging anomalies before they cascade into failures… Agents enable personalization. By tailoring interactions and decisions to individual customer profiles or behaviors, agents can adapt the process dynamically to maximize satisfaction and outcomes. Agents bring elasticity to operations. Because agents are digital, their execution capacity can expand or contract in real time depending on workload, business seasonality, or unexpected surges—something difficult to achieve with fixed human resource models. Agents also make operations more resilient. By monitoring disruptions, rerouting operations, and escalating only when needed, they keep processes running—whether it’s supply chains navigating port delays or service workflows adapting to system outages.
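The cycle-time argument in the first of these points can be illustrated with a toy model. This is a minimal sketch, assuming hypothetical step names, durations and a fixed handoff delay between human-run steps; it contrasts a sequential workflow (the sum of the steps plus handoffs) with independent steps run in parallel (bounded by the slowest step). Real processes have dependencies that limit how much can run in parallel.

```python
# Toy model of workflow cycle time. Step names, durations and the handoff
# delay are hypothetical illustrations, not figures from the McKinsey report.


def sequential_cycle_time(durations: list[float], handoff_delay: float) -> float:
    """Steps run one after another, with a handoff wait between each pair."""
    if not durations:
        return 0.0
    return sum(durations) + handoff_delay * (len(durations) - 1)


def parallel_cycle_time(durations: list[float]) -> float:
    """Independent steps run simultaneously; the slowest step sets the pace."""
    return max(durations, default=0.0)


# Hypothetical order-processing steps, durations in hours.
steps = {"credit check": 2.0, "inventory check": 1.0, "pricing review": 3.0}
hours = list(steps.values())

print(f"Sequential with handoffs: {sequential_cycle_time(hours, 4.0):.1f}h")
print(f"Parallel (agent-run):     {parallel_cycle_time(hours):.1f}h")
```

Most of the saving in this toy case comes from removing handoff waits; where one step needs another's output, the parallel figure is a lower bound rather than a forecast.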

The report includes illustrative case studies of vertical use cases.

The challenge with AI-based automation of vertical use cases is that it requires significant customisation, through iterative adaptation, before it is ready for deployment in live settings with real business risks. The IT revolution of the last three decades was about figuring out business process automation. While it automated workflows, the larger business task (which consisted of several such processes) was still managed by human workers and supervisors, who exercised judgment and made decisions in completing it. Besides, they could step in and address deficiencies in the digitised workflows.

Agentic AI now seeks to automate the task itself, outsourcing decision-making to agents. Unlike logic-driven processes, decision-making involves the exercise of judgment that goes beyond logical processing. It is far more complex, even for mundane tasks. It requires a much higher order of accuracy and reliability, and much more rigorous safeguards and internal controls.

Consider the recent instance of Deloitte being forced to apologise to and refund the Australian government after its 237-page report reviewing the government’s welfare compliance systems was found to be riddled with references to sources and experts that did not exist. The report was apparently written with the help of AI, and the episode raises serious questions about the credibility of AI-assisted consulting.

Task automation, therefore, requires intensive iteration and adaptation of the agent over long periods before it is deployed in the business. This, in turn, requires considerable human engagement of very high quality, with significant domain experience, over an extended period. Even the biggest firms will struggle to mobilise and concentrate such talent for so long. This requirement raises questions about the economics of task automation.

It may, however, only be a matter of time before at least some of these problems are overcome and vertical use cases of task automation emerge and become mainstream. Even then, it remains to be seen what proportion of human judgment can be outsourced and automated. I’m inclined to believe that all but simple logic-driven decisions will remain in the human realm. However, AI is likely to become an important aid in helping human managers make better decisions.

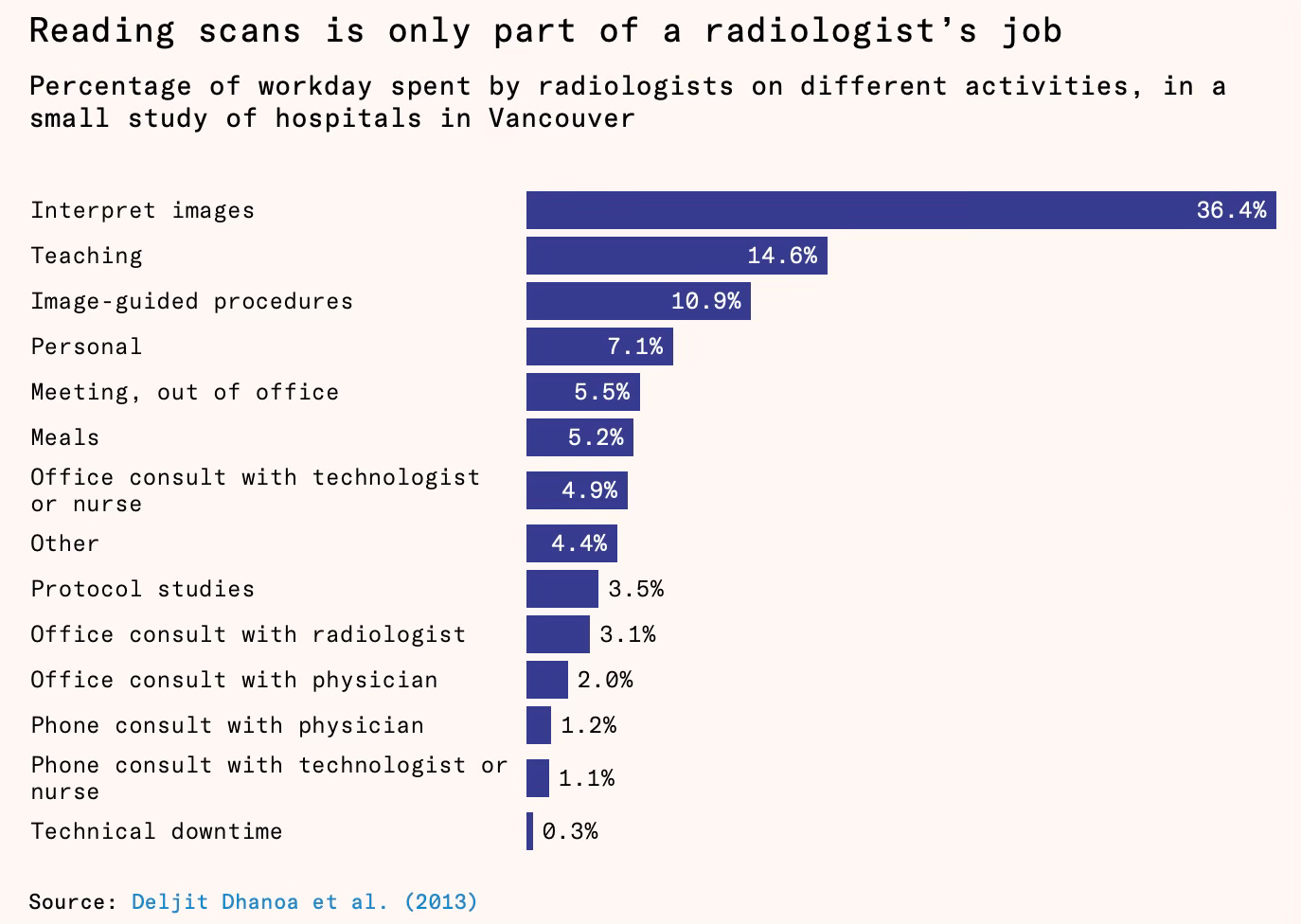

In this context, Deena Mousa examined the impact of AI on radiology, “a field optimised for human replacement, where digital inputs, pattern recognition tasks, and clear benchmarks predominate”. In 2016, Geoffrey Hinton, often described as the godfather of AI, declared that “people should stop training radiologists now”. In reality, however, US diagnostic radiology residency programs offered a record 1,208 positions in 2025, up 4% from 2024; the field’s vacancy rates are at all-time highs; and radiology is the second-highest-paid medical speciality in the US.

Mousa concludes that far from being the canary in the coalmine, radiology shows that replacing humans with AI is harder than it seems. She highlights three reasons.

First, while models beat humans on benchmarks, the standardized tests designed to measure AI performance, they struggle to replicate this performance in hospital conditions. Most tools can only diagnose abnormalities that are common in training data, and models often don’t work as well outside of their test conditions. Second, attempts to give models more tasks have run into legal hurdles: regulators and medical insurers so far are reluctant to approve or cover fully autonomous radiology models. Third, even when they do diagnose accurately, models replace only a small share of a radiologist’s job. Human radiologists spend a minority of their time on diagnostics and the majority on other activities, like talking to patients and fellow clinicians…

Even with hundreds of imaging algorithms approved by the Food and Drug Administration (FDA) on the market, the combined footprint of today’s radiology AI models still covers only a small fraction of real-world imaging tasks. Many cluster around a few use cases: stroke, breast cancer, and lung cancer together account for about 60 percent of models, but only a minority of the actual radiology imaging volume that is carried out in the US.

Multi‑task foundation models may widen coverage, and different training sets could blunt data gaps. But many hurdles cannot be removed with better models alone: the need to counsel the patient, shoulder malpractice risk, and receive accreditation from regulators. Each hurdle makes full substitution the expensive, risky option and human plus machine the default.

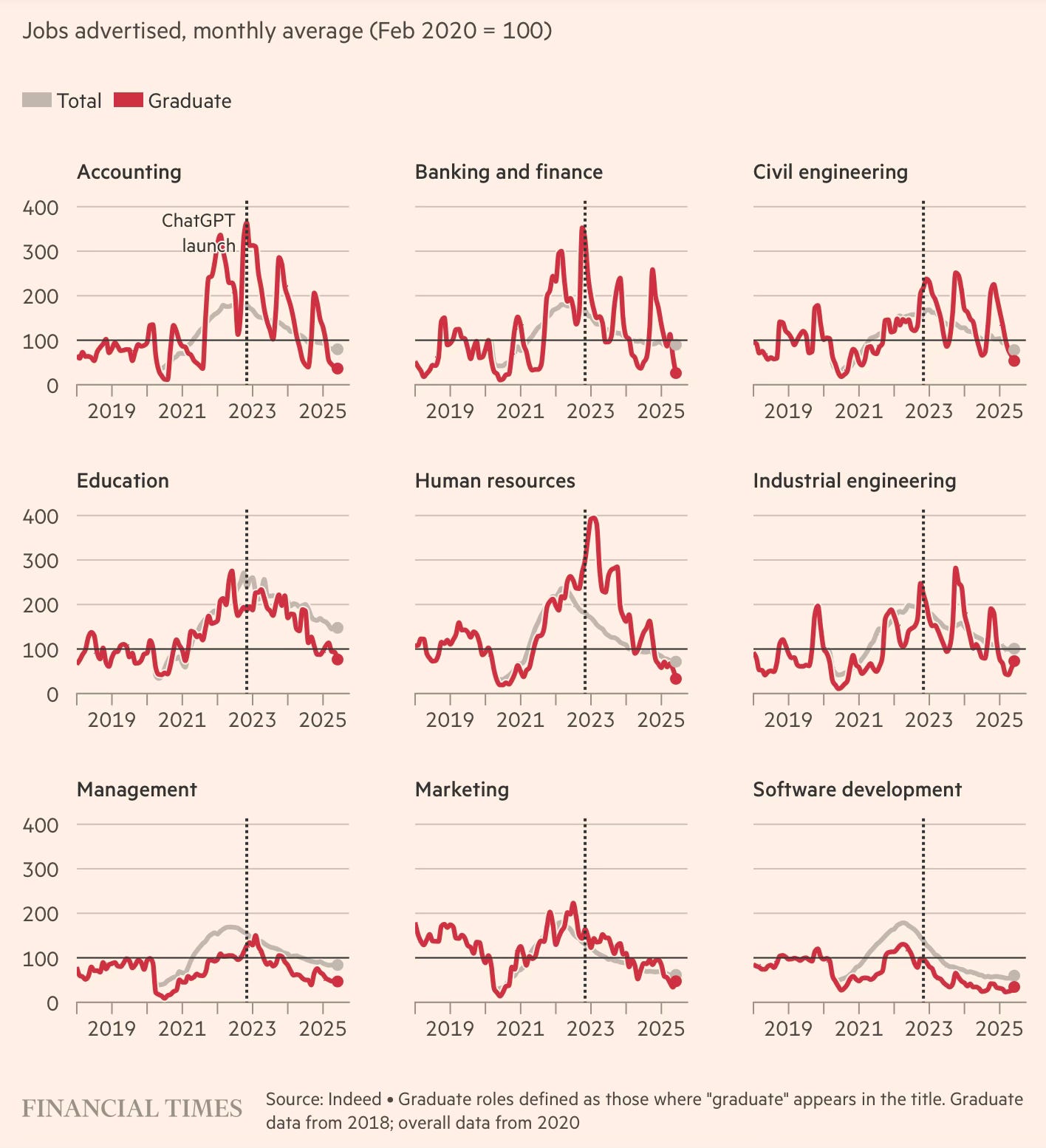

The impact of Gen AI on the labour market is also a matter of debate. A recent FT long read found that while job listings for graduate roles are down across sectors, the decline is broad-based and does not discriminate between sectors identified as more or less vulnerable to Gen AI. This is from UK job listings.

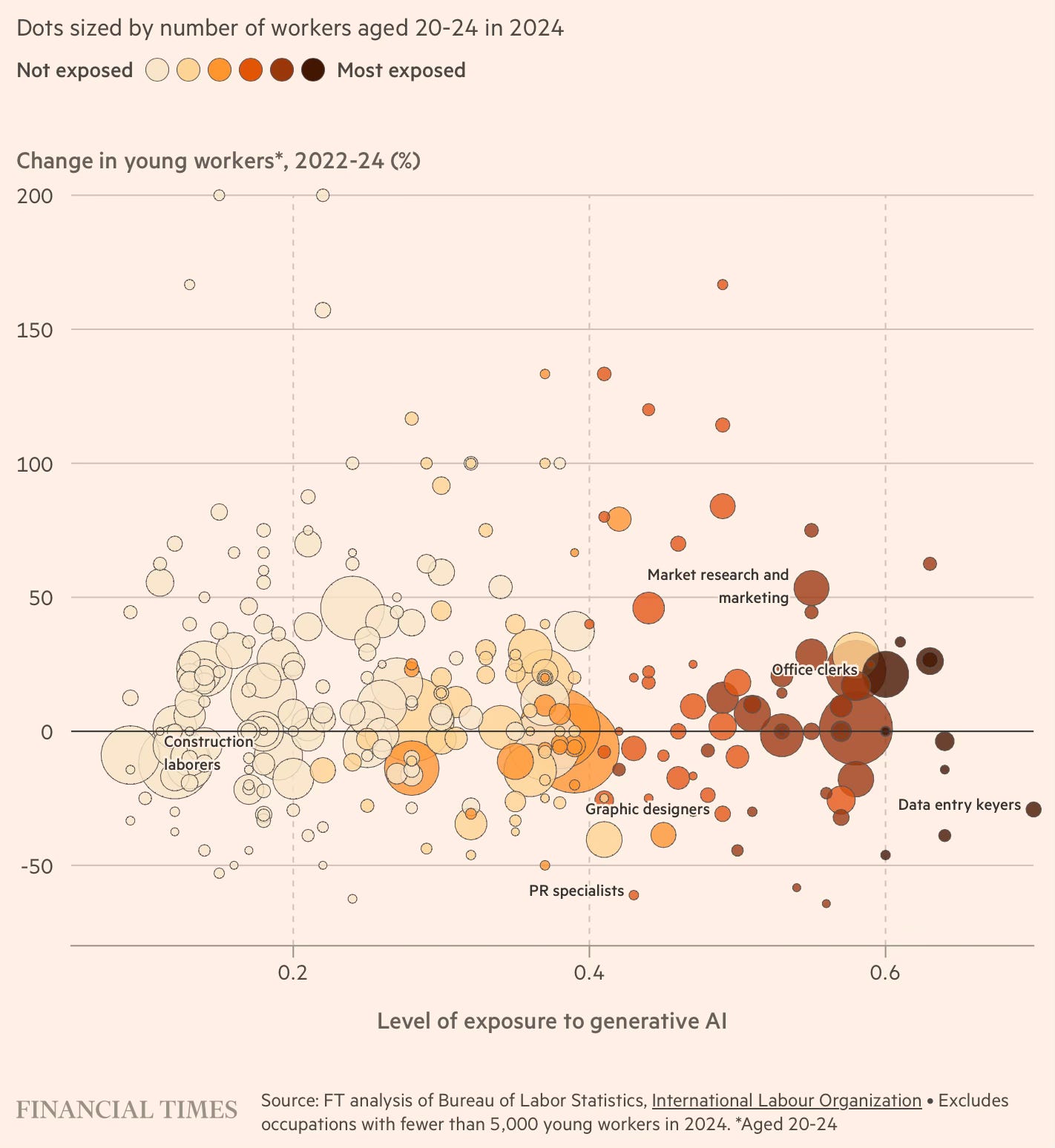

A similar analysis of US jobs at high risk from Gen AI does not show that they have shed young workers to a greater degree since the launch of ChatGPT in 2022.

In general, entry-level jobs have tended to track the wider labour market trends.

All these trends are likely to reverse in the coming years, but predicting the extent of the reversal and its outcomes is a pure gamble.

While the economic impacts are a matter of debate, the social impacts of AI are likely to be far more pronounced. The rise of the digital economy, with the internet, communication tools and social media platforms, has transformed our social lives and increased convenience. AI will build on this and is likely to have similarly transformative impacts, for better or worse. For example, the possibilities, both positive and negative, that a video-generation app like OpenAI’s Sora opens up for society and politics are enormous.
